Binary Exploitation 101 - ASLR

This blog series is still a work in progress. The content may change without notice.

In this chapter, we’ll learn about ASLR (Address Space Layout Randomization) and its bypass. The materials for this chapter can be found in the chapter_07 folder.

Introduction

As we learned in the previous chapter, SSP is a mitigation that makes it harder to overwrite the return address via a buffer overflow. However, an attacker can bypass this mitigation if they manage to leak the stack canary. So, how can we make attacks even more difficult? Recall that ROP relies on using ROP gadgets within glibc. When writing a payload, an attacker needs to know the addresses of ROP gadgets. If these addresses are hard to predict, an attack becomes much more difficult. This is the idea behind ASLR (Address Space Layout Randomization).

ASLR

ASLR is a security mechanism that randomizes the base addresses of certain memory regions, such as shared libraries and the stack, each time a program runs. Because the addresses of ROP gadgets then change with each execution, ROP-based attacks become more difficult. In previous chapters, we disabled ASLR using the following command:

    sudo sysctl -w kernel.randomize_va_space=0

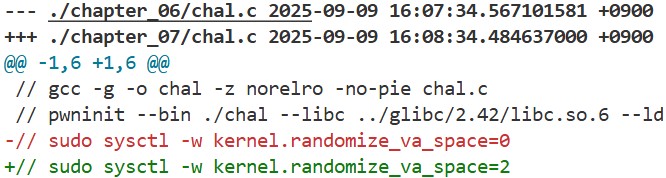

Looking at chal.c in this chapter, the code itself hasn’t changed, but we can see that ASLR is now enabled:

Let’s check how ASLR behaves in GDB. When GDB starts, it disables ASLR by default, so we run set disable-randomization off to enable it:

    pwndbg -q --ex 'set disable-randomization off' --ex 'b main' --ex 'r' ./chal_patched

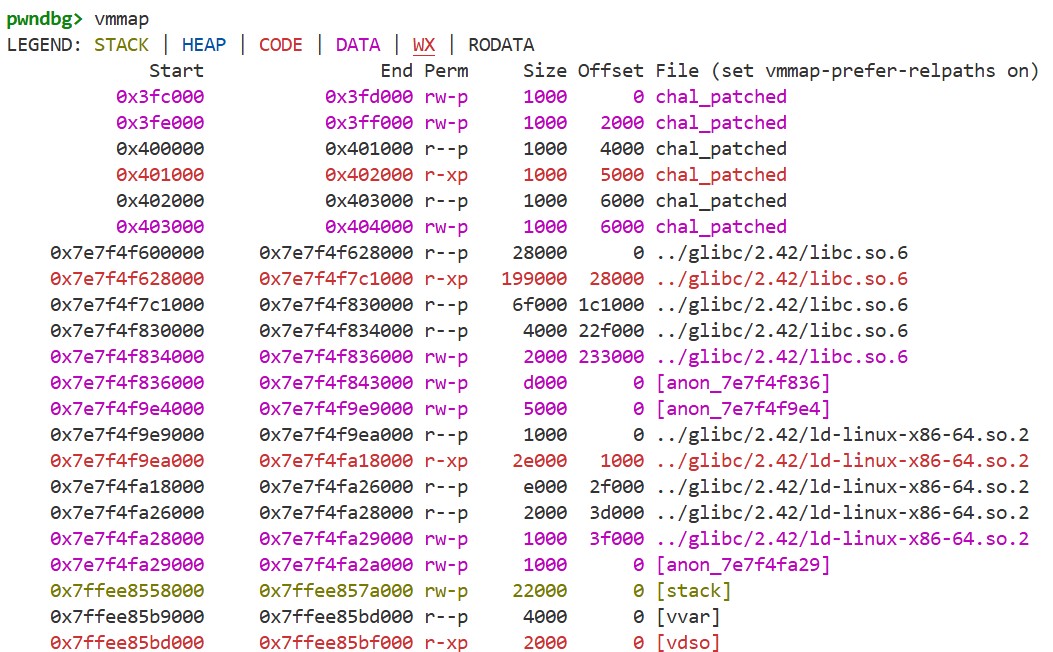

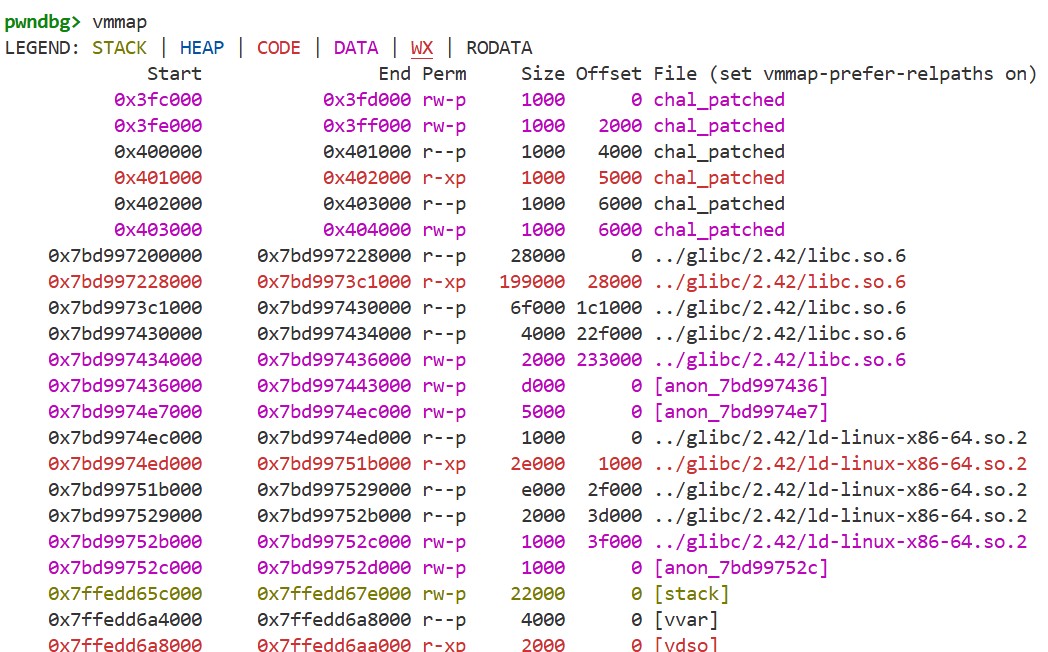

If we run vmmap to see the memory map, it looks like this, for example:

By using r to run the program again and then executing vmmap once more, we get the following:

We can see that the base addresses of glibc, the stack, and other memory regions change with each execution.
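To compare runs outside GDB, the same kind of listing can be read from /proc/<pid>/maps. The following is a minimal sketch of pulling a region's base address out of such a listing. The helper name region_base and the sample text (including every address and path in it) are made up for illustration; they are not taken from the chapter's materials.

```python
from typing import Optional

# Hypothetical excerpt in /proc/<pid>/maps format; all addresses are made up.
SAMPLE_MAPS = """\
00400000-00401000 r--p 00000000 08:01 123 /work/chal_patched
7f3a1c000000-7f3a1c028000 r--p 00000000 08:01 456 /work/libc.so.6
7f3a1c028000-7f3a1c1bd000 r-xp 00028000 08:01 456 /work/libc.so.6
7ffd5a1e0000-7ffd5a201000 rw-p 00000000 00:00 0 [stack]
"""


def region_base(maps_text: str, name: str) -> Optional[int]:
    """Return the lowest start address among mappings whose path ends with `name`."""
    starts = []
    for line in maps_text.splitlines():
        fields = line.split()
        # A named mapping has six fields: range, perms, offset, dev, inode, path.
        if len(fields) >= 6 and fields[-1].endswith(name):
            start_hex = fields[0].split("-")[0]
            starts.append(int(start_hex, 16))
    return min(starts) if starts else None


if __name__ == "__main__":
    print(hex(region_base(SAMPLE_MAPS, "libc.so.6")))  # prints 0x7f3a1c000000
```

Feeding it the contents of /proc/self/maps from two separate runs should give two different values for libc.so.6 and [stack] when ASLR is enabled.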

Let’s learn how ASLR works by examining the Linux kernel code. In particular, we’ll focus on how the base addresses of shared libraries such as glibc are randomized. ld-linux-x86-64.so.2 loads libc.so.6 into memory by calling mmap. As a result, the kernel’s do_mmap function is invoked, which determines the mapping address by calling __get_unmapped_area:

    unsigned long do_mmap(struct file *file, unsigned long addr,
                          unsigned long len, unsigned long prot,
                          unsigned long flags, vm_flags_t vm_flags,
                          unsigned long pgoff, unsigned long *populate,
                          struct list_head *uf)
    {
        ...
        addr = __get_unmapped_area(file, addr, len, pgoff, flags, vm_flags);

Eventually, the unmapped_area_topdown function is called, and it returns the highest address that satisfies the required conditions within the range [info->low_limit, info->high_limit):

    /**
     * unmapped_area_topdown() - Find an area between the low_limit and the
     * high_limit with the correct alignment and offset at the highest available
     * address, all from @info. Note: current->mm is used for the search.
     *
     * @info: The unmapped area information including the range [low_limit -
     * high_limit), the alignment offset and mask.
     *
     * Return: A memory address or -ENOMEM.
     */
    unsigned long unmapped_area_topdown(struct vm_unmapped_area_info *info)

In the arch_get_unmapped_area_topdown function, info->high_limit is set to the value of mmap_base:

    unsigned long
    arch_get_unmapped_area_topdown(struct file *filp, unsigned long addr0,
                                   unsigned long len, unsigned long pgoff,
                                   unsigned long flags, vm_flags_t vm_flags)
    {
        ...
        info.high_limit = get_mmap_base(0);
        ...
        addr = vm_unmapped_area(&info);

The value of mmap_base is determined by the mmap_base function:

    static unsigned long mmap_base(unsigned long rnd, unsigned long task_size,
                                   struct rlimit *rlim_stack)
    {
        unsigned long gap = rlim_stack->rlim_cur;
        unsigned long pad = stack_maxrandom_size(task_size) + stack_guard_gap;

        /* Values close to RLIM_INFINITY can overflow. */
        if (gap + pad > gap)
            gap += pad;

        /*
         * Top of mmap area (just below the process stack).
         * Leave an at least ~128 MB hole with possible stack randomization.
         */
        gap = clamp(gap, SIZE_128M, (task_size / 6) * 5);

        return PAGE_ALIGN(task_size - gap - rnd);
    }
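To get a feel for the arithmetic in mmap_base, here is a rough Python model of the function above. The constants are assumptions about a typical x86-64 configuration (4 KiB pages, a 47-bit user address space, a 256-page stack guard gap, an 8 MiB stack limit, and a 22-bit stack randomization mask). None of them appear in the excerpt, so treat the concrete numbers as illustrative rather than authoritative.

```python
PAGE_SIZE = 1 << 12                  # assumption: 4 KiB pages
SIZE_128M = 128 << 20
MASK64 = (1 << 64) - 1               # models C's 64-bit unsigned long
TASK_SIZE = (1 << 47) - PAGE_SIZE    # assumption: 47-bit user address space
STACK_GUARD_GAP = 256 * PAGE_SIZE    # assumption: default stack_guard_gap
STACK_MAXRANDOM = 0x3FFFFF << 12     # assumption: stack_maxrandom_size() with ASLR on


def page_align(addr: int) -> int:
    """Round up to the next page boundary, like PAGE_ALIGN."""
    return (addr + PAGE_SIZE - 1) & ~(PAGE_SIZE - 1)


def clamp(value: int, lo: int, hi: int) -> int:
    return max(lo, min(value, hi))


def mmap_base(rnd: int, task_size: int = TASK_SIZE, rlim_cur: int = 8 << 20,
              stack_maxrandom: int = STACK_MAXRANDOM) -> int:
    gap = rlim_cur
    pad = stack_maxrandom + STACK_GUARD_GAP
    # Python integers never overflow, so the kernel's overflow guard is
    # modelled by wrapping the sum to 64 bits before comparing.
    if ((gap + pad) & MASK64) > gap:
        gap += pad
    gap = clamp(gap, SIZE_128M, (task_size // 6) * 5)
    return page_align(task_size - gap - rnd)


if __name__ == "__main__":
    for rnd in (0, 0x1000, 0x12345000):
        print(hex(rnd), "->", hex(mmap_base(rnd)))
```

Under these assumptions, a larger rnd simply slides mmap_base downward page by page. With rnd = 0 and stack_maxrandom = 0 (roughly the ASLR-off case), the model yields 0x7ffff7fff000, which should look familiar from GDB sessions with randomization disabled.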

The argument rnd is set to the value returned by the arch_rnd function:

    static unsigned long arch_rnd(unsigned int rndbits)
    {
        if (!(current->flags & PF_RANDOMIZE))
            return 0;
        return (get_random_long() & ((1UL << rndbits) - 1)) << PAGE_SHIFT;
    }
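The masking and shifting in arch_rnd can be mirrored in a few lines of Python. This is only a sketch: secrets.randbits stands in for get_random_long, the randomize parameter stands in for the PF_RANDOMIZE check, and the value 28 for rndbits is an assumption about a common x86-64 default rather than something shown in the excerpt.

```python
import secrets

PAGE_SHIFT = 12  # assumption: 4 KiB pages


def arch_rnd(rndbits: int, randomize: bool = True) -> int:
    """Return a page-aligned random offset with `rndbits` bits of entropy."""
    if not randomize:          # stands in for the PF_RANDOMIZE check
        return 0
    random_long = secrets.randbits(64)              # stands in for get_random_long()
    return (random_long & ((1 << rndbits) - 1)) << PAGE_SHIFT


if __name__ == "__main__":
    for _ in range(3):
        print(hex(arch_rnd(28)))   # 28 is an assumed value for rndbits
```

The low 12 bits of the result are always zero, so only the page number is randomized. This is why the low three hex digits of a leaked library address stay the same from run to run.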

When ASLR is enabled, the following code in the load_elf_binary function sets the PF_RANDOMIZE bit in current->flags. As a result, the arch_rnd function above returns a random value:

    static int load_elf_binary(struct linux_binprm *bprm)
    {
        ...
        const int snapshot_randomize_va_space = READ_ONCE(randomize_va_space);
        if (!(current->personality & ADDR_NO_RANDOMIZE) && snapshot_randomize_va_space)
            current->flags |= PF_RANDOMIZE;
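The condition above has two inputs: the per-process personality flags and the system-wide randomize_va_space setting. A small sketch of that decision follows. The function name should_randomize is made up for illustration, and the ADDR_NO_RANDOMIZE value is the one I believe is defined in linux/personality.h.

```python
ADDR_NO_RANDOMIZE = 0x0040000  # personality flag (value as in linux/personality.h)


def should_randomize(personality: int, randomize_va_space: int) -> bool:
    """Mirror the check that decides whether PF_RANDOMIZE gets set."""
    return not (personality & ADDR_NO_RANDOMIZE) and randomize_va_space != 0


if __name__ == "__main__":
    print(should_randomize(0, 2))                   # True: the usual default
    print(should_randomize(0, 0))                   # False: sysctl set to 0
    print(should_randomize(ADDR_NO_RANDOMIZE, 2))   # False: per-process opt-out
```

The per-process path is, as far as I know, how GDB's disable-randomization setting works: it sets ADDR_NO_RANDOMIZE on the debugged process instead of touching the system-wide sysctl.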

In summary, when ASLR is enabled, mmap_base varies with each execution, causing the addresses at which shared libraries are loaded to change every time. This is how ASLR works.

ASLR Bypass

When ASLR is enabled, the addresses of ROP gadgets also change with each execution, so we cannot use the exploit from the previous chapter as-is. Then, how can we bypass ASLR and execute a shell?

Remember that ASLR randomizes the base addresses of shared libraries. Only the base address changes; the offsets from the base to the ROP gadgets remain the same. Therefore, if we can somehow leak the base address, we can determine the addresses of the ROP gadgets. The question is: how can we leak the libc base?
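The "base plus fixed offset" idea can be sketched as follows. The offsets and base addresses here are hypothetical placeholders, not values from this chapter's libc.so.6. In practice they would come from inspecting the actual library.

```python
# Hypothetical offsets for illustration only; real values depend on the libc build.
GADGET_OFFSETS = {
    "pop_rdi_ret": 0x2A3E5,
    "system": 0x50D70,
    "bin_sh": 0x1D8678,
}


def rebase(libc_base: int, offsets: dict) -> dict:
    """Turn libc-relative offsets into absolute addresses for one execution."""
    return {name: libc_base + offset for name, offset in offsets.items()}


if __name__ == "__main__":
    # Two made-up bases, standing in for two runs with ASLR enabled.
    for base in (0x7F3A1C000000, 0x7F91D4200000):
        addrs = rebase(base, GADGET_OFFSETS)
        print({name: hex(addr) for name, addr in addrs.items()})
```

The absolute addresses differ between the two runs, but the distance between any two gadgets is identical. A single leaked libc address is therefore enough to locate everything else.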

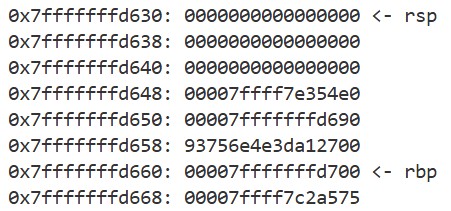

By starting GDB with the following command and running chal_patched, we can see the output of the first dump_stack call. Interestingly, we notice that an address within libc.so.6 is stored at rbp + 0x8:

    pwndbg -q --ex 'b *0x401230' --ex 'r' ./chal_patched

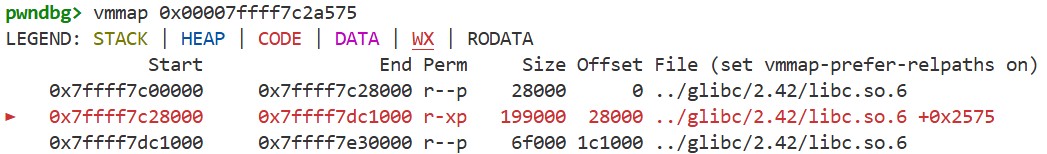

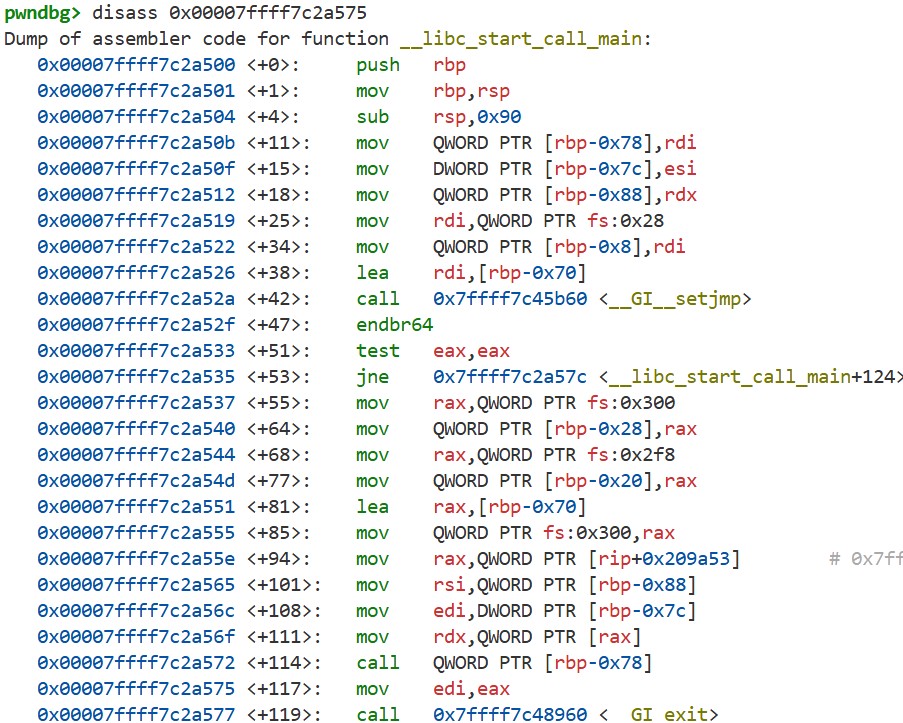

The value stored at rbp + 0x8 is the return address of the main function. Using the disass command, we can see that this address points to the code of the __libc_start_call_main function:

In other words, the main function is called from the __libc_start_call_main function. This can also be confirmed by looking at the glibc source code:

    _Noreturn static void
    __libc_start_call_main (int (*main) (int, char **, char ** MAIN_AUXVEC_DECL),
                            int argc, char **argv
    #ifdef LIBC_START_MAIN_AUXVEC_ARG
                            , ElfW(auxv_t) *auxvec
    #endif
                            )
    {
      ...
      result = main (argc, argv, __environ MAIN_AUXVEC_PARAM);
      ...
      exit (result);

Even if ASLR is enabled, the offset from the base address does not change, so we can use this value to calculate the base address of glibc.
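As a sketch of that calculation: subtract the offset of the return site inside libc.so.6 from the leaked value, then check that the result is page-aligned. The helper name libc_base_from_leak and the numbers in the usage lines are hypothetical. The real offset has to be measured for the libc.so.6 shipped with this chapter, for example by comparing the leaked value with the libc base shown by vmmap.

```python
PAGE_SIZE = 0x1000


def libc_base_from_leak(leaked_ret: int, ret_offset: int) -> int:
    """Recover the libc base from main's leaked return address.

    `ret_offset` is the offset of the return site within libc.so.6.
    """
    base = leaked_ret - ret_offset
    # Library bases are page-aligned, so a misaligned result suggests
    # a wrong offset or a wrong leak.
    if base % PAGE_SIZE != 0:
        raise ValueError(f"suspicious libc base: {base:#x}")
    return base


if __name__ == "__main__":
    # Both numbers are made up for illustration.
    leaked = 0x7F3A1C029D90
    print(hex(libc_base_from_leak(leaked, 0x29D90)))  # prints 0x7f3a1c000000
```

The alignment check is cheap and catches most mistakes early, before a bad base address leads to a confusing crash in the ROP chain.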

Exercise

Based on what you have learned so far, write an exploit that bypasses ASLR and launches a shell. Before you start, make sure to execute the following command to enable ASLR (if you are using a Docker container, run it on the host):

    sudo sysctl -w kernel.randomize_va_space=2
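Before debugging an exploit, it can help to confirm that the setting actually took effect. The sketch below reads the value back from /proc/sys/kernel/randomize_va_space. The helper names are made up, and the descriptions of each mode are my summary of the kernel documentation, not text from this series.

```python
from pathlib import Path

# Summary of the documented modes (paraphrased).
MODES = {
    0: "ASLR disabled",
    1: "stack, mmap base and vDSO randomized",
    2: "as 1, plus heap (brk) randomization",
}


def parse_aslr_mode(text: str) -> int:
    """Parse the contents of /proc/sys/kernel/randomize_va_space."""
    return int(text.strip())


def current_aslr_mode(path: str = "/proc/sys/kernel/randomize_va_space") -> int:
    return parse_aslr_mode(Path(path).read_text())


if __name__ == "__main__":
    try:
        mode = current_aslr_mode()
        print(mode, "-", MODES.get(mode, "unknown"))
    except OSError as exc:
        # The file is not available on non-Linux systems.
        print("could not read ASLR setting:", exc)
```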

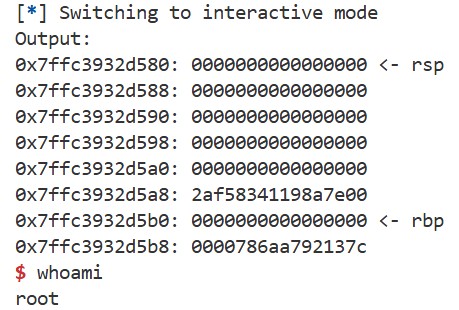

You can use the template, and the following hints may help. If successful, you should be able to launch a shell like this:

If you have any questions, feel free to leave a comment below. You can see my solution here.

Hints

- The base address of glibc can be calculated from the output of the dump_stack function.